Most of the major announcements so far in 2019 have focused on 5G, the next generation of mobile network technology. Most semiconductor companies have jumped on the 5G bandwagon, making it easier for smartphone OEMs to build phones that can take advantage of the network infrastructure changes.

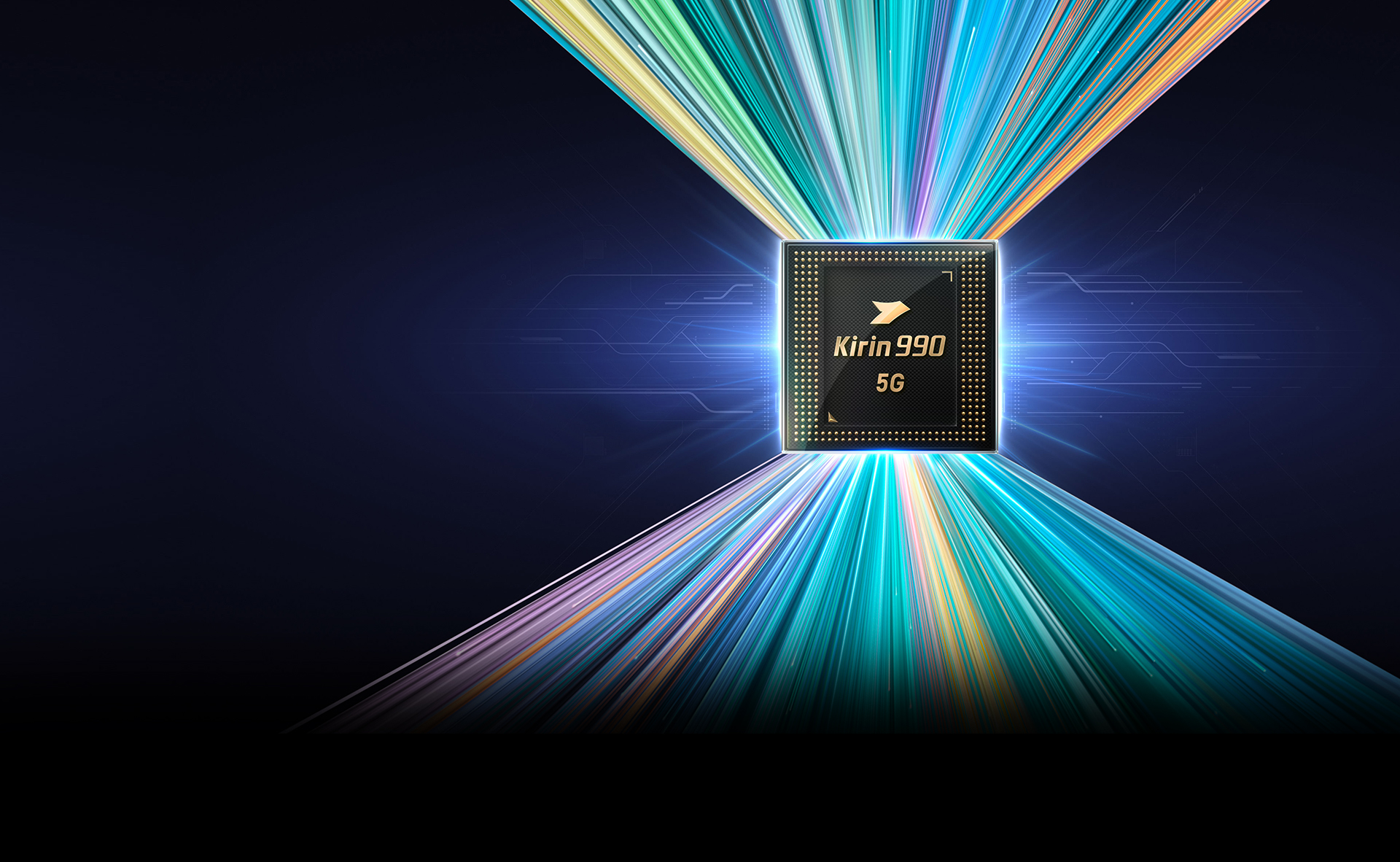

Most 5G-enabled SoCs released to date, however, have essentially been 4G chips paired with a separate 5G modem to provide 5G functionality. Samsung recently announced the Exynos 980 SoC with an integrated 5G modem, and Huawei is now right behind it with its own flagship SoC with integrated 5G. Meet Huawei’s HiSilicon Kirin 990 5G, unveiled at IFA 2019 alongside the Kirin 990.

The HiSilicon Kirin 990 5G processor has just been officially unveiled, and it already appears to have set some remarkable benchmark results. A “Huawei Dev Phone” was tested on AI Benchmark, and the results are striking for anyone interested in smartphone AI capabilities.

According to AI Benchmark, devices are put through a barrage of 21 tests, including object recognition, photo enhancement, memory limits, and even playing classic Atari games.

In the performance ranking for phones, the Huawei Dev Phone has a substantial lead over its rivals. The AI score of 76,206 is more than double the 35,130 points managed by the Honor 20S.

Other notable competitors also fall behind: Asus ROG Phone II – 32,727 points; Samsung Galaxy Note 10+ – 29,055 points; Sony Xperia 1 – 26,672 points; OnePlus 7 Pro – 25,720 points. The Huawei Dev Phone, which apparently comes with 8 GB of RAM and runs Android 10, is classed as a prototype, but it could possibly be a Mate 30 Pro.
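To put the size of that lead in perspective, here is a minimal Python sketch that divides the Huawei Dev Phone's score by each rival's score. The figures are the ones quoted above; the variable names are purely illustrative and have nothing to do with AI Benchmark itself.

```python
# AI Benchmark phone scores as quoted in this article.
phone_scores = {
    "Huawei Dev Phone": 76206,
    "Honor 20S": 35130,
    "Asus ROG Phone II": 32727,
    "Samsung Galaxy Note 10+": 29055,
    "Sony Xperia 1": 26672,
    "OnePlus 7 Pro": 25720,
}

# The leader is simply the phone with the highest score.
leader = max(phone_scores, key=phone_scores.get)

# How many times larger the leader's score is than each rival's.
ratios = {
    name: phone_scores[leader] / score
    for name, score in phone_scores.items()
    if name != leader
}

# Print the rivals from closest to furthest behind.
for name, ratio in sorted(ratios.items(), key=lambda item: item[1]):
    print(f"{leader} scores {ratio:.2f}x the {name}")
```

Every ratio comes out above 2, so the "more than double" description holds for all five rivals listed, not just the Honor 20S.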

Similar results can be found in the performance ranking for mobile SoCs, with the HiSilicon Kirin 990 5G dominating the field with a score of 52,403. The Snapdragon 855 Plus falls behind on 24,652 points and the Exynos 9825 Octa struggles to keep up on 17,514 points.

Even the Unisoc Tiger T710 is beaten, with its impressive score of 28,097 points paling in comparison to the Kirin 990 5G’s outstanding NPU performance. In this case at least, Huawei’s claim that the new SoC offers the most powerful AI seems justified.
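The SoC figures can be ranked the same way. The short sketch below, again using only the scores quoted in this article and illustrative variable names, sorts the four chips and works out how far the Kirin 990 5G sits ahead of the runner-up.

```python
# AI Benchmark SoC scores as quoted in this article.
soc_scores = {
    "HiSilicon Kirin 990 5G": 52403,
    "Snapdragon 855 Plus": 24652,
    "Exynos 9825 Octa": 17514,
    "Unisoc Tiger T710": 28097,
}

# Sort from highest to lowest score.
ranking = sorted(soc_scores.items(), key=lambda item: item[1], reverse=True)

(top_name, top_score), (second_name, second_score) = ranking[0], ranking[1]

# Lead of the top chip over the runner-up, as a percentage of the runner-up's score.
lead_pct = (top_score - second_score) / second_score * 100

for position, (name, score) in enumerate(ranking, start=1):
    print(f"{position}. {name}: {score:,} points")
print(f"{top_name} leads {second_name} by {lead_pct:.1f}%")
```

On these numbers the Unisoc Tiger T710, not the Snapdragon 855 Plus, is the closest challenger, and the Kirin 990 5G's score is still a little under double it.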
